Artificial Intelligence

The scenario drawn in the article “Motivation” may seem rather surreal, but this sort of vision has been on the minds of researchers of all kinds for centuries.

The first evidence of people theorizing about machines that act autonomously on behalf of humans can be traced back to around 400 B.C. Much later, Leonardo da Vinci, one of the most famous polymaths in history, designed a humanoid robot whose movable limbs were inspired by human anatomy. One of the first machines to execute a task that only humans had been able to do before was the “writer” of Jaquet-Droz. In short, the machine could be programmed to write whole sentences chosen by the user, which was quite an advancement at the time.

Apart from these (and later) largely mechanical attempts to realize the vision of autonomously acting machines, there was no really successful progress in simulating a sophisticated form of intelligence in such machines before the 20th century.

In the more recent past, however, famous theorists such as Alan Turing worked on implementing an artificial intelligence (AI) in data processing systems (e.g. for machine translation). Since then, major advances have been made in several fields (e.g. image recognition, autonomous driving, game-playing AI) towards realizing the old vision of autonomous machines.

But to really dive into the topic, one question has to be answered first: what is AI?

Definition

Films such as I, Robot (2004) and other works of fiction (e.g. books) draw a fairly consistent picture of AI: in the future, human beings are confronted with systems that exhibit a form of intelligence similar to, or even more sophisticated than, the human one.

Leaving aside the fact that all these works deal, directly or indirectly, with the threat arising from a (super-)human artificial intelligence, the understanding of AI they convey works quite well for a general grasp of the topic.

As a rough definition, AI can be seen as an intelligence of machines or systems that enables them to think, act, live and sometimes even feel like humans. It is powered by progress in scientific research across multiple disciplines such as applied statistics, computer science, psychology and more.

When talking about AI, there is sometimes some confusion about the terminology. While some use AI, Machine Learning and Deep Learning interchangeably, others think of complete, cohesive intelligent systems or applications when they talk about AI.

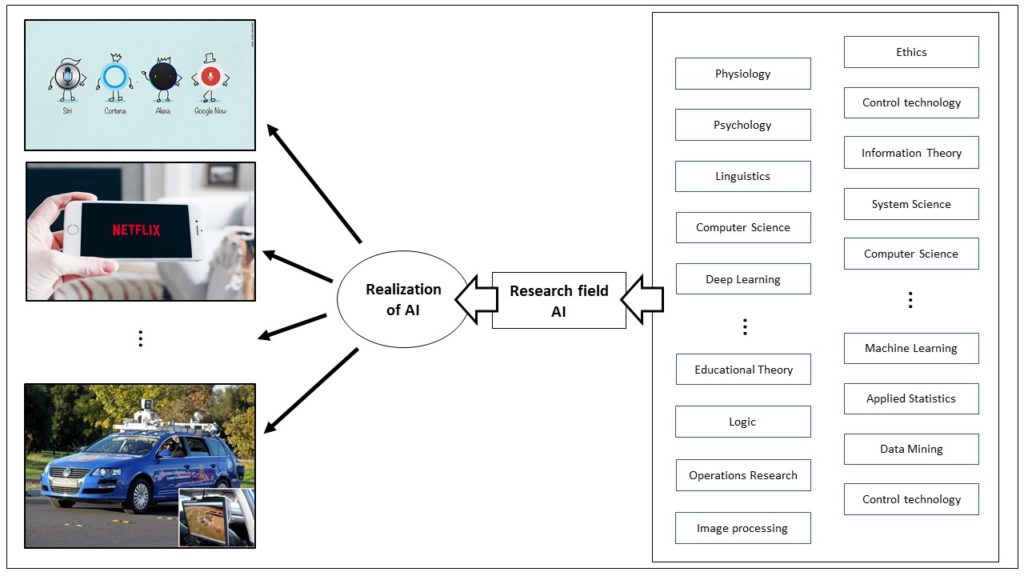

Therefore, it is important to ensure a clear and common understanding of the terminology as well as of the origins of the field. As the figure above shows, a first distinction can be made between the realization of AI and the research field of AI.

A realization of AI can be, for example, your Amazon Echo Dot, which decodes the words you say or, to be precise, the sound waves of these words, processes the data in e.g. a neural network and then acts upon this information. Another example would be your Netflix or Spotify account, which observes your film or music preferences in order to propose new films or songs to you via a recommender system based on your past behaviour. Another prominent example is autonomous driving: the car collects as much data as possible about its surroundings through cameras and sensors, and e.g. a convolutional neural network continuously classifies the objects surrounding the vehicle so that it can drive autonomously.
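To make the recommender example a little more tangible, here is a minimal sketch of the idea. It assumes a tiny, made-up listening history and a simple similarity heuristic (cosine similarity between users). It is not how Netflix or Spotify actually work, and all names and data (`history`, `cosine`, `recommend`) are hypothetical.

```python
from math import sqrt

# Hypothetical listening history: user -> {song: play count}
history = {
    "ana": {"song_a": 5, "song_b": 3},
    "ben": {"song_a": 4, "song_b": 2, "song_c": 5},
    "cem": {"song_d": 4, "song_e": 5},
}

def cosine(u, v):
    """Cosine similarity between two sparse play-count vectors."""
    shared = set(u) & set(v)
    dot = sum(u[s] * v[s] for s in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def recommend(user, history, top_n=3):
    """Suggest unseen songs, weighted by how similar other users are."""
    seen = history[user]
    scores = {}
    for other, plays in history.items():
        if other == user:
            continue
        sim = cosine(seen, plays)
        if sim <= 0:
            continue  # users with no overlap contribute nothing
        for song, count in plays.items():
            if song not in seen:
                scores[song] = scores.get(song, 0.0) + sim * count
    ranked = sorted(scores, key=lambda s: scores[s], reverse=True)
    return ranked[:top_n]
```

With this toy data, `recommend("ana", history)` returns `["song_c"]`: "ben" has similar taste to "ana" and has listened to one song she has not heard yet, while "cem" shares no songs with her and is ignored.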

To enable machines to act this way, the research field of AI delivers the necessary capabilities or rather the concepts for building these capabilities. As the historical overview below shows, the field emerged in the middle of the 20th century. AI is a very large, interdisciplinary research field with several influences.

In general, it can be seen as a branch of computer science, but in fact it draws on many scientific fields: applied statistics, Machine Learning, operations research, control engineering, psychology, linguistics and others provide many valuable concepts and theories that advance the field and enable intelligent, autonomous behaviour of machines in complex, changing environments.

Maturity Levels of AI

In order to distinguish the different forms of AI, it is possible to consider the so-called maturity levels of AI:

Weak AI:

- Artificial Narrow Intelligence (ANI)

Strong AI:

- Artificial General Intelligence (AGI)

- Artificial Super Intelligence (ASI)

In the case of Artificial Narrow Intelligence (ANI), the system gets a single, specific task done in a smart manner, for example speech recognition or autonomous driving. It cannot extend its own capabilities autonomously and learns only from the data its users provide. It can be categorized as “weak AI”. Proponents of this school of thought argue that the fact that intelligence can be modelled within a machine to enable smart behaviour doesn’t mean that this machine is literally as intelligent as a human being.

The second class of AI is Artificial General Intelligence (AGI), which can be classified as strong AI. Such a system is able to execute a variety of tasks autonomously and can autonomously acquire additional techniques or knowledge to perform these tasks even better. No existing system reaches this level yet. A frequently cited step in this direction is DeepMind’s AlphaGo, which beat the professional Go player Ke Jie in 2017; since it masters only a single game, however, it is still a narrow AI.

The last category of AI is Artificial Super Intelligence (ASI). This category of AI surpasses human intelligence in every field and learns, combines and explores knowledge in a highly efficient, super-human manner. With today’s instruments and methods, it is generally not possible to reach such a level of maturity, but cognitive systems may be a promising field for further exploration towards it.

To give a clear working definition of AI for this website, we can use the following:

AI refers to the study and realization of capabilities in machines which enable them to adapt to, react to, interact with and shape their environment in an intelligent, human-like manner, using concepts and methodologies from several fields such as Machine Learning, Computer Science, Linguistics or Psychology. Key drivers of AI are computing power, suitable algorithms and, essentially, the availability of relevant data.

Now that the foundation for a clear understanding of the topic has been laid, the following overview summarizes the history of AI.

18th to early 20th Century

Foundations for AI

- Bayes, Laplace and Markov lay important foundations for the further development of AI

- Cauchy develops the gradient descent algorithm

1943

First work on AI

Warren McCulloch & Walter Pitts publish their concept of artificial neurons: any computable function can be computed by a network of connected neurons

1947

Alan Turing gives first lecture on AI

1949

Hebbian Learning

Donald Hebb publishes his work on an update rule for neural networks which is still relevant today

1950

Turing’s influential work and first neural network machine

- “Computing Machinery and Intelligence” by Alan Turing introduces the famous Turing Test and anticipates Machine Learning, reinforcement learning and genetic algorithms

- Marvin L. Minsky and Dean Edmonds build the Stochastic Neural Analog Reinforcement Calculator (SNARC) at Harvard, using 3000 vacuum tubes and an automatic pilot mechanism from a B-24 bomber

Summer 1956

The birth of AI

- McCarthy, Minsky, Shannon and Rochester invite the most relevant researchers of the time interested in automata theory, neural networks and the study of intelligence to a two-month workshop at Dartmouth College: at this workshop the name AI appears for the first time

- At this workshop, Newell & Simon present the “Logic Theorist”, the first AI program mimicking the problem-solving skills of human beings

1958

First AI programming language from MIT

- McCarthy develops LISP, a high-level programming language designed for AI that remains dominant in the field for the next 30 years

Early 1960s

AI research flourishes

- B. Widrow enhances Hebb’s learning methods and calls his networks Adalines

- Winograd & Cowan show how many elements can collectively represent a single concept, which increases robustness and parallelism

- F. Rosenblatt publishes his work on the famous perceptron

- The General Problem Solver is the first program to imitate the human approach to thinking

- Founding of the AI Lab at Stanford

1966

Eliza & AI Winter

- “Eliza”, a program developed by Weizenbaum, is able to respond and interact in natural human language

- The US government cancels funding for academic machine translation projects

- AI research finds itself disillusioned

1967

k-means

MacQueen develops the k-means algorithm and coins its name

1969

Back-propagation learning algorithms appear for the first time

1976

AI in disease recognition

Identification of infectious diseases with the help of Mycin (expert system, Shortliffe & Buchanan)

1981

Japan’s AI investment

Japan launches the Fifth Generation project. The project aims to build intelligent machines programmed in Prolog and is planned to run for 10 years

1986

Research on AI regaining strength

- New advances in neural networks through the renaissance of back-propagation, which had already been explored in 1969

- Multiple companies use specific narrow AI programs to optimize their processes

1988

AI, Probability & Decision Theory

Probability and decision theory gain new acceptance in the field of AI through the work of Pearl (1988) and Cheeseman (1985)

1990

First appearance of the concept of Boosting in Schapire (1990)

1993

Worldwide RoboCup competition

Competition to build an autonomous soccer-playing robot

1995

The Support Vector Machine algorithm is developed

1997

AI masters chess

IBM Deep Blue beats chess champion Kasparov

2003

RoboCup Championship

At the RoboCup Championship, individually constructed and programmed autonomous robots play soccer against each other

2007

Leading experts raise concerns

McCarthy, Minsky, Nilsson & Winston share concerns about the current development of the field: much effort is being invested in specialized tasks such as autonomous driving, but far less in the development of human-level intelligence

2008

Concept of t-SNE algorithm published & AGI first mentioned

- Van der Maaten & Hinton publish t-SNE (t-distributed stochastic neighbor embedding), a technique for visualizing high-dimensional data

- Artificial General Intelligence first mentioned by Goertzel and Pennachin

2009

First self-driving car from Google on California’s streets

2011

AI beats humans in game show & Siri comes to life

- IBM Watson defeats two champions in the knowledge-based game show “Jeopardy!”. AI proves that it can understand natural language and answer precise questions

- Apple implements Siri in its products

2015

Progress in autonomous driving and gaming AI

- First autonomous truck from Daimler on German highway

- Google has already collected 1.6 million km of driving data and now drives in cities

- DeepMind’s AI “AlphaGo” defeats the European champion Fan Hui in the complex board game “Go”

2017

Researchers at the University of Washington create a fake Obama speech video via a lip-syncing AI

2018

Revelation that Amazon had been working since 2014 on a recruiting AI which turned out to be biased against women

2018

AI can detect several diseases

2017-2019

Fast progress in text-to-speech systems

Google and DeepMind develop realistic text-to-speech systems based on neural networks, such as Tacotron 2

2019

Microsoft invests $1 billion in OpenAI to boost research on Artificial General Intelligence

This relatively small snapshot of the history of AI does not claim to be complete. It serves as a general orientation for the reader to grasp the speed of development and the variety of approaches developed so far.

In summary, AI is a fairly new field of research that has gone through periods of general enthusiasm as well as phases of disappointment. With the current advances in hardware performance, an overall acceleration of progress is palpable.

After reading this article, the reader should have a basic understanding of AI, which makes it easier to place the following articles within the much bigger framework called AI.
